Download Zip Files In R

Download and Unzip Newspaper Files Using R

Download a set of newspaper files from the British Library's open repository

Most of the rest of this book uses newspaper data from the British Library's Heritage Made Digital Project. This project is digitising a number of nineteenth-century newspaper titles, and making the underlying data (essentially the text) openly available.

The titles released so far are available on the British Library's research repository here: https://bl.iro.bl.uk/collection/353c908d-b495-4413-b047-87236d2573e3, and more will be added with time.

The repository is divided into collections and datasets. The British Library 'newspapers collection' contains a series of datasets, each of which contains a number of files. Each of the files is a zip file containing all the METS/ALTO .xml for a single year of that title.

The first part of this tutorial will show you how to bulk download all or some of the available titles. This was heavily inspired by the method found here. If you want to bulk download titles using a much more robust method (using the command line, so no need for R), then I really recommend checking out that repository.

To do this there are four basic steps (the third is optional):

- Make a list of all the links in the repository collection for each title

- Go into each of those title pages, and get a list of all links for each zip file download.

- Optionally, you can specify which titles you'd like to download, or even which year.

- Download all the relevant files to your machine.

First, load the libraries needed:

    library(tidyverse)
    ## ── Attaching packages ─────────────────────────────────────── tidyverse 1.3.0 ──
    ## ✓ ggplot2 3.3.3     ✓ purrr   0.3.4
    ## ✓ tibble  3.1.5     ✓ dplyr   1.0.6
    ## ✓ tidyr   1.1.3     ✓ stringr 1.4.0
    ## ✓ readr   1.4.0     ✓ forcats 0.5.0
    ## ── Conflicts ────────────────────────────────────────── tidyverse_conflicts() ──
    ## x dplyr::filter() masks stats::filter()
    ## x dplyr::lag()    masks stats::lag()
    library(XML)
    library(xml2)
    library(rvest)
    ##
    ## Attaching package: 'rvest'
    ## The following object is masked from 'package:XML':
    ##
    ##     xml
    ## The following object is masked from 'package:purrr':
    ##
    ##     pluck
    ## The following object is masked from 'package:readr':
    ##
    ##     guess_encoding

Next, grab all the pages of the collection:

    urls = paste0("https://bl.iro.bl.uk/collections/353c908d-b495-4413-b047-87236d2573e3?locale=en&page=", 1:3)

Use lapply to go through the list, use the function read_html to read each page into R, and store each as an item in a list:

    list_of_pages <- lapply(urls, read_html)

Next, write a function that takes a single html page (as downloaded with read_html), extracts the links and newspaper titles, and puts them into a dataframe.

    # Take one parsed page and return a dataframe of dataset links and titles
    make_df = function(x){
      # Links inside paragraph tags, made absolute
      all_collections = x %>%
        html_nodes("p") %>%
        html_nodes('a') %>%
        html_attr('href') %>%
        paste0("https://bl.iro.bl.uk", .)

      # The visible text of the same links (the newspaper titles)
      all_collections_titles = x %>%
        html_nodes("p") %>%
        html_nodes('a') %>%
        html_text()

      # Keep only the links that point to a dataset page
      all_collections_df = tibble(all_collections, all_collections_titles) %>%
        filter(str_detect(all_collections, "concern\\/datasets"))

      all_collections_df
    }

Run this function on the list of html pages. This will return a list of dataframes. Merge them into one with rbindlist from data.table.

    l = map(list_of_pages, make_df)
    l = data.table::rbindlist(l)
    l %>% knitr::kable('html')

| all_collections | all_collections_titles |
|---|---|
| https://bl.iro.bl.uk/concern/datasets/0a4f3f09-11ff-4360-a73e-ce3a7654f14c?locale=en | May's British and Irish Press Guide and Advertiser's Handbook & Dictionary etc. (1871-1880) |
| https://bl.iro.bl.uk/concern/datasets/020c22c4-d1ee-4fca-bf75-0420fe59347a?locale=en | The Newspaper Press Directory (1846-1880) |
| https://bl.iro.bl.uk/concern/datasets/93ec8ab4-3348-409c-bf6d-a9537156f654?locale=en | The Express |
| https://bl.iro.bl.uk/concern/datasets/2f70fbcd-9530-496a-903f-dfa4e7b20d3b?locale=en | The Press. |
| https://bl.iro.bl.uk/concern/datasets/dd9873cf-cba1-4160-b1f9-ccdab8eb6312?locale=en | The Star |
| https://bl.iro.bl.uk/concern/datasets/f3ecea7f-7efa-4191-94ab-e4523384c182?locale=en | National Register. |
| https://bl.iro.bl.uk/concern/datasets/551cdd7b-580d-472d-8efb-b7f05cf64a11?locale=en | The Statesman |
| https://bl.iro.bl.uk/concern/datasets/aef16a3c-53b6-4203-ac08-d102cb54f8fa?locale=en | The British Press; or, Morning Literary Advertiser |
| https://bl.iro.bl.uk/concern/datasets/b9a877b8-db7a-4e5f-afe6-28dc7d3ec988?locale=en | The Sun |
| https://bl.iro.bl.uk/concern/datasets/fb5e24e3-0ac9-4180-a1f4-268fc7d019c1?locale=en | The Liverpool Standard etc |
| https://bl.iro.bl.uk/concern/datasets/bacd53d6-86b7-4f8a-af31-0a12e8eaf6ee?locale=en | Colored News |
| https://bl.iro.bl.uk/concern/datasets/5243dccc-3fad-4a9e-a2c1-d07e750c46a6?locale=en | The Northern Daily Times etc |
| https://bl.iro.bl.uk/concern/datasets/7da47fac-a759-49e2-a95a-26d49004eba8?locale=en | British and Irish Newspapers |
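To see what the link extraction and filtering do without contacting the repository, the same steps can be tried on a small piece of inline HTML. This is only a sketch: the HTML string and the `abc-123` identifier are made up for illustration and are not real repository content, and `demo_df` is a hypothetical name.

```r
library(rvest)
library(dplyr)
library(stringr)

# Hypothetical stand-in for one collection page (NOT real repository HTML)
page <- read_html('
  <html><body>
    <p><a href="/concern/datasets/abc-123?locale=en">The Example Gazette</a></p>
    <p><a href="/about">About this repository</a></p>
  </body></html>
')

# Same extraction steps as in make_df: links inside <p> tags, and their text
links  <- page %>% html_nodes("p") %>% html_nodes("a") %>% html_attr("href")
titles <- page %>% html_nodes("p") %>% html_nodes("a") %>% html_text()

# Build the dataframe and keep only links that point to a dataset page
demo_df <- tibble(
  all_collections = paste0("https://bl.iro.bl.uk", links),
  all_collections_titles = titles
) %>%
  filter(str_detect(all_collections, "concern/datasets"))

demo_df
```

Only the first link survives the filter, because the second does not contain `concern/datasets`.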

Now we have a dataframe containing the url for each of the titles in the collection. The second stage is to go to each of these urls and extract the relevant download links.

Write another function. This takes a url, extracts all the links and IDs within it, and turns it into a dataframe. It then filters to just the relevant links (which have the ID 'file_download').

    # Take the url of one title page and return its file download links
    get_collection_links = function(c){
      collection = c %>% read_html()

      # Collect the href, id and title attributes of every link on the page
      links = collection %>% html_nodes('a') %>% html_attr('href')
      id    = collection %>% html_nodes('a') %>% html_attr('id')
      text  = collection %>% html_nodes('a') %>% html_attr('title')

      # Keep only the download links
      links_df = tibble(links, id, text) %>%
        filter(id == 'file_download')

      links_df
    }

Use lapply to run this function on the column of urls from the previous step, and merge the results with rbindlist. Keep just the links which contain the text Download BLNewspapers.

    t = lapply(l$all_collections, get_collection_links)
    t = t %>%
      data.table::rbindlist() %>%
      filter(str_detect(text, "Download BLNewspapers"))

The new dataframe needs a bit of tidying up. To use the download.file() function in R we also need to specify the full filename and location where we'd like the file to be put. At the moment the 'text' column is what we want but it needs some alterations. First, remove the 'Download' text from the beginning.

Next, separate the text into a series of columns, using either _ or . as the separator. Create a new 'filename' column which pastes the different bits of the text back together without the long code.

Add /newspapers/ to the beginning of the filename, so that the files can be downloaded into that folder.

    t = t %>%
      mutate(text = str_remove(text, "Download ")) %>%
      # Split on either an underscore or a full stop
      separate(text,
               into = c('type', 'nid', 'title', 'year', 'code', 'ext'),
               sep = "_|\\.") %>%
      # Where there is no long code, the extension ends up in the 'code' column
      mutate(filename = ifelse(!is.na(ext),
                               paste0(type, "_", nid, "_", title, "_", year, '.', ext),
                               paste0(type, "_", nid, "_", title, "_", year, '.', code)))
    ## Warning: Expected 6 pieces. Additional pieces discarded in 22 rows [91, 92,
    ## 93, 94, 95, 96, 97, 98, 99, 100, 101, 102, 103, 104, 105, 106, 107, 108, 109,
    ## 110, ...].
    ## Warning: Expected 6 pieces. Missing pieces filled with `NA` in 103 rows [113,
    ## 114, 115, 116, 117, 118, 119, 120, 121, 122, 123, 124, 125, 126, 127, 128, 129,
    ## 130, 131, 132, ...].

    # Change this path to the 'newspapers' folder on your own machine
    t = t %>%
      mutate(links = paste0("https://bl.iro.bl.uk", links)) %>%
      mutate(destination = paste0('/Users/Yann/Documents/non-Github/r-for-newspaper-data/newspapers/', filename))

The result is a dataframe which can be used to download either all or some of the files.
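The splitting step, and the reason for the warnings, is easier to follow on two rows. The sketch below uses two 'text' values reconstructed from rows of the 1855 table in this tutorial (one with a long code, one without), so treat the exact strings as an assumption. `demo` is a hypothetical name, and the `extra` and `fill` arguments of separate() are used here only to silence the warnings.

```r
library(dplyr)
library(tidyr)
library(stringr)

# Two example 'text' values, reconstructed from the 1855 rows (assumed form)
demo <- tibble(text = c(
  "Download BLNewspapers_0002642_TheExpress_1855_aad58961-131c-416c-846f-be435bf63d38.zip",
  "Download BLNewspapers_0002194_TheSun_1855.zip"
))

demo <- demo %>%
  mutate(text = str_remove(text, "Download ")) %>%
  # Split on "_" or "."; the second row has only five pieces, so 'ext' is NA
  separate(text,
           into = c("type", "nid", "title", "year", "code", "ext"),
           sep = "_|\\.", extra = "drop", fill = "right") %>%
  # With five pieces the extension lands in 'code', so use it as the suffix
  mutate(filename = ifelse(!is.na(ext),
                           paste0(type, "_", nid, "_", title, "_", year, ".", ext),
                           paste0(type, "_", nid, "_", title, "_", year, ".", code)))

demo$filename
```

Both rows end up with a filename of the same shape, with the long code dropped where it was present.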

You can now filter this dataframe to produce a list of titles and/or years you're interested in. For example, if you just want all the newspapers for 1855:

    files_of_interest = t %>% filter(as.numeric(year) == 1855)
    ## Warning in mask$eval_all_filter(dots, env_filter): NAs introduced by coercion
    files_of_interest %>% knitr::kable('html')

| links | id | type | nid | title | year | code | ext | filename | destination |
|---|---|---|---|---|---|---|---|---|---|
| https://bl.iro.bl.uk/downloads/aa8b9145-a7d9-4869-8f3e-07d864238ff0?locale=en | file_download | BLNewspapers | 0002642 | TheExpress | 1855 | aad58961-131c-416c-846f-be435bf63d38 | zip | BLNewspapers_0002642_TheExpress_1855.zip | /Users/Yann/Documents/non-Github/r-for-newspaper-data/newspapers/BLNewspapers_0002642_TheExpress_1855.zip |
| https://bl.iro.bl.uk/downloads/cee52757-0855-4cd0-9c49-f0e619f9b245?locale=en | file_download | BLNewspapers | 0002645 | ThePress | 1855 | 9e2e5f1b-3677-456a-8d43-6774a99c1928 | zip | BLNewspapers_0002645_ThePress_1855.zip | /Users/Yann/Documents/non-Github/r-for-newspaper-data/newspapers/BLNewspapers_0002645_ThePress_1855.zip |
| https://bl.iro.bl.uk/downloads/da6bd756-ff9a-475a-b1e9-94fbc8c01639?locale=en | file_download | BLNewspapers | 0002194 | TheSun | 1855 | zip | NA | BLNewspapers_0002194_TheSun_1855.zip | /Users/Yann/Documents/non-Github/r-for-newspaper-data/newspapers/BLNewspapers_0002194_TheSun_1855.zip |
| https://bl.iro.bl.uk/downloads/3184fc4c-8d01-41bc-8528-c08033d185d1?locale=en | file_download | BLNewspapers | 0002090 | TheLiverpoolStandardAndGeneralCommercialAdvertiser | 1855 | zip | NA | BLNewspapers_0002090_TheLiverpoolStandardAndGeneralCommercialAdvertiser_1855.zip | /Users/Yann/Documents/non-Github/r-for-newspaper-data/newspapers/BLNewspapers_0002090_TheLiverpoolStandardAndGeneralCommercialAdvertiser_1855.zip |
| https://bl.iro.bl.uk/downloads/b4e83547-fe78-41e2-8a31-3c47de4a7c03?locale=en | file_download | BLNewspapers | 0002244 | ColoredNews | 1855 | zip | NA | BLNewspapers_0002244_ColoredNews_1855.zip | /Users/Yann/Documents/non-Github/r-for-newspaper-data/newspapers/BLNewspapers_0002244_ColoredNews_1855.zip |
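Other selections follow the same pattern. As a sketch, the filters below pick one title across a range of years, and several titles for a single year. The small `demo` dataframe is a hypothetical stand-in with only the two relevant columns; its rows are illustrative and do not describe which years are actually available.

```r
library(dplyr)

# Hypothetical miniature of the links dataframe (illustrative rows only)
demo <- tibble(
  title = c("TheSun", "TheSun", "TheExpress", "ThePress"),
  year  = c("1850", "1855", "1855", "1860")
)

# One title across a range of years
sun_1850s <- demo %>%
  filter(title == "TheSun", between(as.numeric(year), 1850, 1859))

# Several titles for a single year
two_titles_1855 <- demo %>%
  filter(title %in% c("TheSun", "TheExpress"), as.numeric(year) == 1855)

sun_1850s
two_titles_1855
```

The title values need to match the 'title' column exactly, which has no spaces (for example TheSun rather than The Sun).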

To download these we use the Map function, which will apply the function download.file to the vector of links, using the destination column we created as the file destination. download.file by default times out after 60 seconds, but these downloads will take much longer. Increase this using options(timeout=9999).

Before this step, you'll need to create a new folder called 'newspapers', within the working directory of the R project.
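The folder can also be created from R rather than by hand. The following is a minimal sketch, not part of the original method: `download_if_missing` is a hypothetical helper that creates the destination folder if needed, skips files that are already on disk, and reports failures instead of stopping, which can help when a long batch of downloads is interrupted and has to be restarted.

```r
# Hypothetical helper: download one file unless it already exists locally.
# Returns "skipped", "downloaded" or "failed".
download_if_missing <- function(url, dest) {
  if (file.exists(dest)) {
    return("skipped")
  }
  # Create the destination folder (e.g. 'newspapers') if it is not there yet
  dir.create(dirname(dest), recursive = TRUE, showWarnings = FALSE)

  # download.file returns 0 on success; treat an error as a failure too
  status <- tryCatch(
    download.file(url, dest, mode = "wb", quiet = TRUE),
    error = function(e) 1L
  )
  if (status != 0) {
    unlink(dest)  # remove any partial file so a later run retries it
    return("failed")
  }
  "downloaded"
}

# Possible usage with the dataframe built in this tutorial (not run here):
# options(timeout = 9999)
# results <- mapply(download_if_missing,
#                   files_of_interest$links,
#                   files_of_interest$destination)
# table(results)
```

Returning a status string for each file makes it easy to see afterwards which downloads still need another attempt.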

    options(timeout = 9999)
    Map(function(u, d) download.file(u, d, mode = "wb"), files_of_interest$links, files_of_interest$destination)

Folder structure

Once these have downloaded, you can quickly unzip them using R. First it's worth understanding a little about the folder structure you'll see once they're unzipped.

Each file will have a filename like this:

BLNewspapers_0002194_TheSun_1850.zip

To break it down in parts:

BLNewspapers - This identifies the file as coming from the British Library.

0002194 - This is the NLP, a unique code given to each title. This code is also found on the Title-level list, in case you want to link the titles from the repository to that dataset.

TheSun - This is the title of the newspaper, as found on the Library's catalogue.

1850 - The year.

Construct a Corpus

Construct a 'corpus' of newspapers, using whatever criteria you see fit. Perhaps you're interested in a longitudinal study, and would like to download a small sample of years spread out over the century, or maybe you'd like to look at all the issues in a single newspaper, or perhaps all of a single year across a range of titles.

If you're using Windows, you can use the method below to bulk extract the files. On a Mac, the files will unzip automatically, but they won't merge: the OS will duplicate the folder with a sequential number after it, so you might need to move years from these extra folders into the first NLP folder.

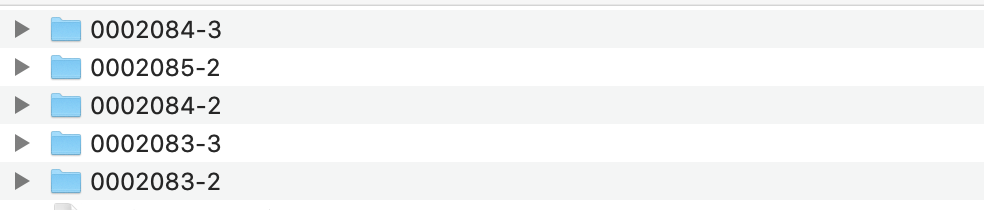

For example: if you download all the zip files from the link above, you'll have some extra folders named like the below:

You'll need to move the year folders within these to the folders named 0002083, and so forth.
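Moving the year folders can also be scripted. The sketch below rests on an assumption about how the duplicated folders are named: a seven-digit NLP code, a space, and a number (for example `0002083 2`). Check the names on your own machine and adjust the pattern if they differ. `merge_duplicate_folders` is a hypothetical helper, not part of any package.

```r
# Hypothetical helper: move year folders out of duplicated NLP folders
# (assumed to be named like "0002083 2") into the original NLP folder.
merge_duplicate_folders <- function(root) {
  dirs <- list.dirs(root, recursive = FALSE, full.names = TRUE)
  # ASSUMPTION: duplicates are a 7-digit code, a space, then a number
  dups <- dirs[grepl("^[0-9]{7} [0-9]+$", basename(dirs))]

  for (d in dups) {
    # The folder the years should end up in, e.g. root/0002083
    target <- file.path(root, sub(" [0-9]+$", "", basename(d)))
    dir.create(target, showWarnings = FALSE)

    for (y in list.dirs(d, recursive = FALSE, full.names = TRUE)) {
      dest <- file.path(target, basename(y))
      # Never overwrite a year folder that already exists in the target
      if (!dir.exists(dest)) file.rename(y, dest)
    }

    # Remove the duplicate folder only once it is empty
    if (length(list.files(d, all.files = TRUE, no.. = TRUE)) == 0) {
      unlink(d, recursive = TRUE)
    }
  }
  invisible(dups)
}

# Possible usage (not run here):
# merge_duplicate_folders("newspapers")
```

Year folders that already exist in the target are left where they are, so nothing is overwritten and any clashes can be resolved by hand.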

Bulk extract the files using unzip() and a for() loop

R can be used to unzip the files in bulk, which is particularly useful if you're using Windows and have downloaded a large number of files. It's very simple: there are just two steps.

First, use list.files() to create a vector, called zipfiles, containing the full file paths to all the zip files in the 'newspapers' folder you've just created.

    zipfiles = list.files("newspapers/", full.names = TRUE)
    zipfiles

Now, use this in a loop with unzip().

Loops in R are very useful for automating simple tasks. The below takes each file named in the 'zipfiles' vector, and unzips it. It takes some time.
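A loop of this kind might look like the following sketch. `unzip_all` is a hypothetical wrapper written for this example. By default it extracts into the current working directory, which is what a plain call to unzip() does; the `pattern` argument is an addition so that only .zip files are picked up.

```r
# Hypothetical helper: unzip every .zip file found in zip_dir.
unzip_all <- function(zip_dir = "newspapers", exdir = ".") {
  # Full paths to the zip files only
  zipfiles <- list.files(zip_dir, pattern = "\\.zip$", full.names = TRUE)

  for (i in seq_along(zipfiles)) {
    # Progress message, since each file can take a while
    message("Unzipping ", basename(zipfiles[i]),
            " (", i, " of ", length(zipfiles), ")")
    unzip(zipfiles[i], exdir = exdir)
  }
  invisible(zipfiles)
}

# Unzip everything in the 'newspapers' folder into the working directory
unzip_all("newspapers")
```

Passing a different folder as `exdir` extracts the files there instead of into the working directory.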

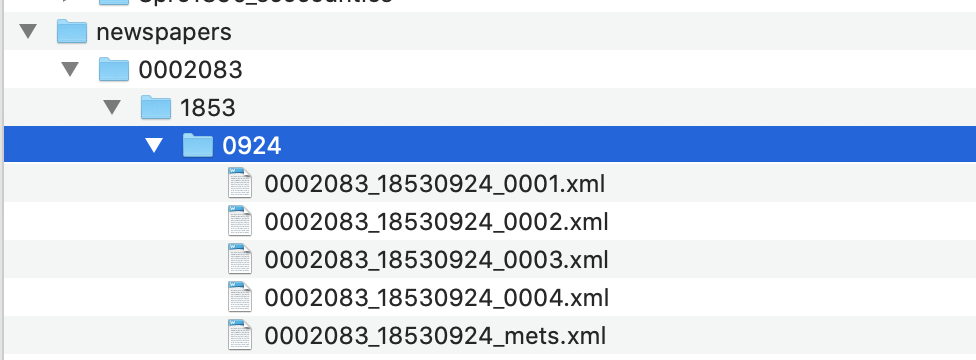

Once this is done, you'll have one or more new folders in the project directory (not the newspapers directory). These are named using the NLP, so they should look like this in your project directory:

To tidy up, put these back into the newspapers folder, so you have the following:

Project folder-> newspapers ->group of NLP folders

You can also delete the compressed files, if you like.

The next step is to extract the full text and put it into .csv files.

Source: https://bookdown.org/yann_ryan/r-for-newspaper-data/download-and-unzip-newspaper-files-using-r.html

Posted by: singleephase.blogspot.com